What is a disaster recovery plan (DRP)?

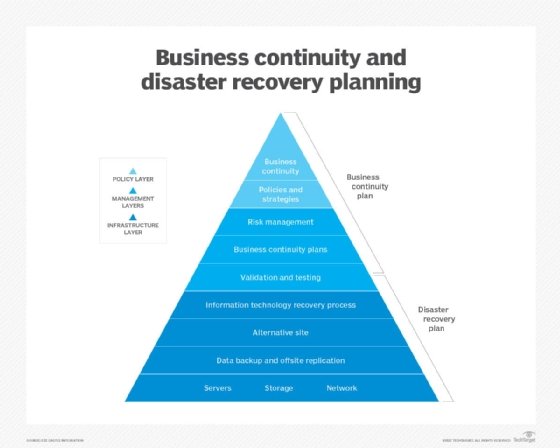

A disaster recovery plan (DRP) is a documented, structured approach that describes how an organization can quickly resume operations after an unplanned incident. A DRP is an essential part of a business continuity plan (BCP). It's applied to the aspects of an organization that depend on a functioning IT infrastructure. A DRP aims to help an organization resolve data loss and recover system functionality so that it can keep operating in the aftermath of an incident, even if systems function at a minimal level.

The plan consists of steps to minimize the effects of a disaster so the organization can continue to operate or quickly resume mission-critical functions. Typically, a DRP involves an analysis of business processes and continuity needs. Before generating a detailed plan, an organization often performs a business impact analysis (BIA) and risk analysis, and it establishes recovery objectives such as a recovery point objective (RPO) and recovery time objective (RTO).

As cybercrime and security breaches become more sophisticated, organizations must define their data recovery and protection strategies. Mitigating incidents before they occur and addressing them quickly when they do happen reduces downtime and minimizes financial, legal and reputational damages. DRPs also help organizations meet compliance requirements while providing a clear roadmap to rapid recovery.

Brief history of DRP

DRP has evolved significantly over the years and has been shaped by various factors, such as technological progress, regulatory demands and the rise of cloud computing.

- Late 1970s. Businesses started relying on computer information systems. This led to the development of enterprise DRPs that relied on the use of duplicate systems or data center sites to provide various response levels. A hot business continuity option responded immediately but was also expensive. Other option levels were developed, including a warm option, which provided a fast response but not as fast as the hot option, and a cold option, which was delayed and least expensive.

- 1983. A crucial step toward formalizing disaster recovery planning was taken when U.S. legislation required national banks to create verifiable backup plans. Other industries followed soon after, spawning the disaster recovery industry that's recognized today. Early disaster recovery efforts centered on data backup and recovery technologies. They recognized that a business can treat system availability and disaster recovery responses differently for different systems and applications.

- Early 1990s. The Disaster Recovery Institute expanded its flagship certification from disaster recovery to business continuity, acknowledging the increasing importance of comprehensive planning that goes beyond IT systems. Businesses further refined their interpretation of the data center infrastructure and recognized the value of a tiered structure that identified systems with business and disaster recovery importance.

- 2000s and 2010s. The broad prevalence of business computing amplified the importance of business continuity and DRP as businesses sought to recover faster and with less data loss. New technologies, such as virtualization, and rapidly increasing network bandwidth supported this increased focus on data protection and recovery. The emergence of remote computing, such as colocation and cloud computing, offered new opportunities for off-site storage and computing capabilities.

- Present. Businesses are using cloud services and computing to delegate their DRPs to third-party providers, leading to the development of disaster recovery as a service (DRaaS). This approach accommodates business growth and offers recovery time, flexibility and cost advantages. However, the increasing use of third-party computing and disaster recovery providers is spawning new customer demand for vendor security and compliance standards. Strong auditability has also risen in importance to ensure that outside providers can safeguard sensitive and critical data, as well as the business itself.

What is considered a disaster?

A disaster is an event that severely affects a business or organization's normal operations. Disasters can encompass a range of events, including natural phenomena, such as earthquakes and floods, as well as man-made incidents, such as cyberattacks and industrial accidents.

Some types of disasters that affect business IT operations include the following:

- Critical application failures or defects, such as a software bug.

- Network communication failures or misconfigurations.

- Utility power outages.

- Natural disasters, such as wildfires and earthquakes.

- Malware and other cyberattacks.

- Transportation accidents.

- Data center facility disasters.

- Campus disasters.

- Citywide disasters.

- Regional disasters.

- National disasters.

- Multinational disasters.

Businesses also have to grapple with other types of challenging situations, such as intellectual property or product liability lawsuits; declining sales and cash flow; and business fraud or other malfeasance. However, these types of business "disasters" aren't typically included in DRP preparations.

Recovery plan considerations

When disaster strikes, the recovery strategy starts at the business level to determine which applications and services are most important to running the organization. The RTO describes the amount of time critical applications can be down, typically measured in hours, minutes or seconds. The RPO describes the age of files that must be recovered from data backup storage for normal operations to resume; in effect, it sets how much recent data the organization can tolerate losing.
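
As a rough illustration of how the two objectives are evaluated, the following Python sketch checks a single incident timeline against an RPO and an RTO. The function names, the timestamps and the four-hour and two-hour targets are all hypothetical values chosen for the example, not recommendations.

```python
from datetime import datetime, timedelta


def meets_rpo(last_backup: datetime, incident: datetime, rpo: timedelta) -> bool:
    """True if the data at risk (incident time minus last backup) fits within the RPO."""
    return incident - last_backup <= rpo


def meets_rto(outage_start: datetime, restored: datetime, rto: timedelta) -> bool:
    """True if the outage duration fits within the RTO."""
    return restored - outage_start <= rto


# Hypothetical incident timeline, for illustration only.
incident = datetime(2024, 1, 10, 14, 0)
last_backup = datetime(2024, 1, 10, 11, 30)  # 2.5 hours before the incident
restored = datetime(2024, 1, 10, 17, 0)      # 3 hours after the incident

print(meets_rpo(last_backup, incident, rpo=timedelta(hours=4)))  # True: 2.5 h <= 4 h
print(meets_rto(incident, restored, rto=timedelta(hours=2)))     # False: 3 h > 2 h
```

In this made-up timeline the backup schedule satisfies the RPO, but the recovery took longer than the RTO allows, which would point to the restoration procedure rather than the backup frequency as the area to improve.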

Recovery strategies define an organization's plans for responding to an incident, while disaster recovery plans describe how the organization should respond. Recovery plans are derived from recovery strategies.

When determining a recovery strategy, organizations should consider budget, insurance coverage, the people involved, physical facilities, management's position on risks, technology needed, data and data storage, suppliers, compliance and other regulatory requirements.

Management approval of recovery strategies is important. All strategies should align with the organization's goals. Once disaster recovery strategies have been developed and approved, they can be translated into disaster recovery plans.

Types of disaster recovery plans

DRPs are usually tailored for a specific environment. Types of plans include the following:

- Backup and restoration plan. This is the most fundamental type of DRP. It generally involves periodic backups of data and applications to suitable targets. Backup targets are traditionally media such as tapes and storage subsystems, but they can also include synchronization with full standby systems. Restoration procedures should be documented and regularly tested to ensure backup media is available, restorable and will work when needed.

- Virtualized disaster recovery plan. Virtualization gives organizations opportunities to execute disaster recovery more efficiently and easily. A virtualized environment can spin up new virtual machine (VM) instances within minutes and provide application recovery through high availability (HA). Testing is also easier, but the plan must validate that applications can be run in disaster recovery mode and returned to normal operations within the RPO and RTO.

- Network disaster recovery plan. Developing a plan for recovering a network becomes more complicated as the network's complexity increases. It's important to provide a detailed, step-by-step recovery procedure, test it and keep it updated. The plan should include information specific to the network, such as its performance, connectivity technology and networking staff.

- Cloud disaster recovery plan. Cloud disaster recovery can range from file backup procedures in the cloud to complete replication. It can be space-, time- and cost-efficient, but maintaining the disaster recovery plan requires proper management. The manager must know the location of physical and virtual servers. The plan must address security, a common issue in the cloud that can be alleviated through testing. Proper documentation and strong cross-team collaboration are critical for cloud DRPs.

- Data center disaster recovery plan. This type of plan focuses exclusively on the data center facility and infrastructure, as well as colocation sites. An operational risk assessment is a key part of a data center DRP. It analyzes key components, such as building location, power systems and protection, security and office space. The plan must address a range of possible scenarios.

- DRaaS. These services are the commercial adaptation of cloud-based disaster recovery. In this model, a third-party service provider replicates and hosts an organization's physical servers and VMs. Governed by a service-level agreement, the provider executes the disaster recovery plan during emergencies.

- HA. Advanced DRPs can adopt HA technologies, such as redundant systems and storage, which can keep applications and services running in the event of disruption. Ideally, users never realize that an incident has occurred. More modest HA plans rely on hot or warm sites and even cloud deployments that can be invoked when issues arise. HA plans should be tested and validated periodically to ensure that redundant systems and services are resilient and provide the expected level of availability.
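
The backup and restoration plan described above calls for restores to be tested, not just backups to be taken. A minimal sketch of that idea in Python follows: it archives a directory, restores the archive to a scratch location and compares file hashes. The helper names (`backup`, `restore_matches`) and the sample file are hypothetical, and a real plan would rely on the organization's own backup tooling rather than this script.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's contents."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup(source_dir: Path, archive: Path) -> None:
    """Write every file under source_dir into a gzip-compressed tar archive."""
    with tarfile.open(archive, "w:gz") as tar:
        for path in sorted(source_dir.rglob("*")):
            if path.is_file():
                tar.add(path, arcname=path.relative_to(source_dir).as_posix())


def restore_matches(source_dir: Path, archive: Path) -> bool:
    """Restore the archive to a scratch directory and compare each file's hash."""
    with tempfile.TemporaryDirectory() as scratch:
        with tarfile.open(archive, "r:gz") as tar:
            tar.extractall(scratch)
        for original in source_dir.rglob("*"):
            if original.is_file():
                restored = Path(scratch) / original.relative_to(source_dir)
                if not restored.is_file() or sha256_of(restored) != sha256_of(original):
                    return False
    return True


# Hypothetical sample data, created in a temporary working directory.
with tempfile.TemporaryDirectory() as work:
    data = Path(work) / "data"
    data.mkdir()
    (data / "orders.csv").write_text("id,total\n1,9.99\n")
    archive = Path(work) / "backup.tar.gz"
    backup(data, archive)
    print(restore_matches(data, archive))  # True
```

The check only proves that the archive can be read back and that its contents match the source at the time of the test; it says nothing about how long a full restore would take, which is a separate RTO question.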

Scope and objectives of DR planning

The main objective of a DRP is to minimize the negative effects of an incident on business operations. A recovery plan can range in scope from basic to comprehensive. Some DRPs are hundreds of pages long.

Disaster recovery budgets vary and fluctuate over time, affecting the technologies and practices deployed for disaster preparedness. Organizations can take advantage of free resources, such as online DRP templates, including TechTarget's Business Continuity Test Template.

Several organizations, including the Business Continuity Institute and Disaster Recovery Institute International, also provide free information and online content.

An IT disaster recovery plan checklist typically includes the following:

- The scope of the recovery in terms of the range or extent of necessary treatment and activity.

- Relevant system and network infrastructure documents.

- A list of the most serious threats and vulnerabilities, as well as the most critical assets.

- Staff members responsible for those systems and networks.

- RTO and RPO information.

- Disaster recovery sites, such as hot sites, warm sites, cold sites, cloud computing resources and backup storage resources.

- The history of unplanned incidents and outages, as well as how they were handled and remediated.

- Current disaster recovery procedures and strategies.

- The incident response team.

- Steps to restart, reconfigure and recover systems and networks.

- Other emergency steps required in the event of a disaster, such as a police or fire response depending on the nature and scope of the disaster.

- How management reviews and approves the DRP.

- How the plan is to be regularly tested.

- How DRP and BCP audits are to be conducted and the plan regularly updated.

The disaster recovery site's location must be considered in a DRP. Distance is an important, but often overlooked, element of the DRP process. An off-site location that's close to the primary data center might seem ideal in terms of cost, convenience, bandwidth and testing. However, outages differ greatly in scope. A severe regional event can destroy the primary data center and its disaster recovery site if the two are located too close together.
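
One way to make the distance question concrete is to compute the great-circle distance between the two sites and compare it with a minimum separation. The Python sketch below uses the haversine formula; the coordinates and the 150 km threshold are hypothetical, and an appropriate separation depends on the regional hazards identified in the organization's own risk analysis.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def distance_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, using the haversine formula."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def far_enough(primary: tuple, dr_site: tuple, min_km: float) -> bool:
    """True if the DR site is at least min_km away from the primary site."""
    return distance_km(*primary, *dr_site) >= min_km


# Hypothetical site coordinates (latitude, longitude) and threshold.
primary = (40.7128, -74.0060)      # New York City
nearby_site = (40.7357, -74.1724)  # Newark, roughly 14 km away
distant_site = (41.8781, -87.6298) # Chicago, more than 1,000 km away

print(far_enough(primary, nearby_site, min_km=150))   # False
print(far_enough(primary, distant_site, min_km=150))  # True
```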

The reality of disaster recovery is that an organization must strike a balance between capability and cost. A business might spend enormous amounts of money and talent on disaster preparedness. That comes at a cost, which must be weighed against the benefit to the business. For example, continuous data protection (CDP) technologies offer strong RPO and RTO benefits, but the cost of CDP isn't appropriate for all applications and business services.

How to build a disaster recovery plan

The DRP process involves more than writing the document. Before creating the DRP, a risk analysis and business impact analysis must be done to determine where to focus and implement resources during the disaster recovery process.

Typically, the following steps are involved in creating a DRP:

- Conduct a BIA. The BIA identifies the effects of disruptive events and is the starting point for identifying risk within the context of disaster recovery. It also generates the RTO and RPO.

- Create a risk analysis. The risk analysis identifies threats and vulnerabilities that could disrupt the operation of systems and processes highlighted in the BIA. It assesses the likelihood of a disruptive event and outlines its potential severity.

- Develop a goals statement. A goals statement delineates the objectives an organization aims to accomplish during and after a disaster, encompassing both the RTO and RPO. Goals identify risks the DRP intends to address and correlate to the risk analysis. Risks that are too costly, unlikely to happen or would have minimal effect are often omitted.

- Identify the DRP team. Disaster recovery plans are living documents. Involving employees from management to entry level increases the plan's value. The DRP should identify the individuals tasked with executing it and include measures to use in the absence of key personnel.

- Take inventory of IT. Create an IT inventory list that includes each item's cost, model, serial number, manufacturer and whether it's rented or owned. Modern change management and data center infrastructure management platforms will typically gather and retain such information, simplifying and accelerating the asset management process.

- Create an internal communication strategy. The communication plan details how both internal and external crisis communication is handled. Internal communication includes alerts that can be sent using email, overhead building paging systems, voice messages and text messages to mobile devices. Examples of internal communication include instructions to evacuate the building and meet at designated places, updates on the progress of the situation and notices when it's safe to return to the building.

- Create an external communication strategy. External communications are even more essential to the BCP and include instructions on how to notify family members in the case of injury or death; how to inform and update key clients and stakeholders on the status of the disaster; how to coordinate disaster responses with local, state, and federal officials; and how to discuss disasters with the media.

- Develop a data backup, recovery and redundancy plan. A detailed plan for data backup, system recovery and restoration of operations should be mandated. The plan highlights redundancy and failover mechanisms for critical infrastructure and systems with HA capabilities. Modern plans should include careful attention to security and compliance requirements to ensure that all computing and data resources in the plan protect sensitive or personally identifiable information.

- Test the plan. The DRP should be regularly tested to pinpoint vulnerabilities and identify potential areas of improvement, such as updating or introducing new technologies to automate or accelerate recovery processes. Training should be conducted as part of testing to familiarize employees with their roles and responsibilities when dealing with a disaster.

- Regularly review and revise the plan. The disaster recovery plan should be consistently reviewed and revised to account for changes in business technology, legislation, operational procedures, system deployments and potential risk factors or threats.
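
The risk analysis and goals statement steps above can be sketched as a simple scoring exercise. The Python example below multiplies likelihood by severity and keeps only the risks that reach a threshold. The 1-to-5 scales, the threshold of 8 and the sample risks are assumptions made for illustration; the sketch also ignores mitigation cost, which the goals statement step treats as a further reason to omit a risk.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int    # hypothetical scale: 1 (minor) to 5 (catastrophic)

    @property
    def score(self) -> int:
        """Simple risk score: likelihood multiplied by severity."""
        return self.likelihood * self.severity


def risks_in_scope(risks: list, threshold: int) -> list:
    """Return the risks scoring at or above the threshold, highest score first."""
    selected = [r for r in risks if r.score >= threshold]
    return sorted(selected, key=lambda r: r.score, reverse=True)


# Hypothetical risk register entries.
register = [
    Risk("Ransomware", likelihood=4, severity=5),            # score 20
    Risk("Utility power outage", likelihood=3, severity=4),  # score 12
    Risk("Regional earthquake", likelihood=1, severity=5),   # score 5
    Risk("Minor application bug", likelihood=2, severity=1), # score 2
]

print([r.name for r in risks_in_scope(register, threshold=8)])
# ['Ransomware', 'Utility power outage']
```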

Disaster recovery plan template

It's common for disaster recovery management teams to use a template to design a DRP. A template asks the questions and provides the guidance practitioners need to develop a comprehensive plan. Practitioners can add or omit sections and details to suit the organization's needs and goals.

Most DRP templates begin with planners summarizing critical action steps and providing a list of important contact information. This arrangement makes the most essential information quickly and easily accessible.

The templates then have the planning group define the roles and responsibilities of disaster recovery team members and outline the criteria to launch the plan into action. The plan should specify, in detail, the incident response and recovery activities. Once the template is prepared, it's recommended that it be stored in a safe and accessible off-site location.

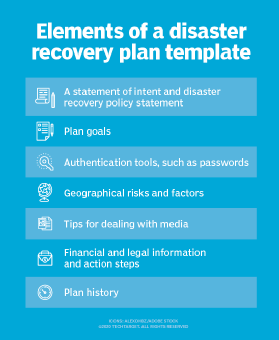

Other important elements of a disaster recovery plan template include the following:

- Statement of intent and DR policy statement.

- Plan goals.

- Authentication tools, such as passwords.

- Geographical risks and factors.

- Tips for dealing with media.

- Financial and legal information and action steps.

- Plan history.

Testing your disaster recovery plan

DRPs are validated through testing to identify deficiencies and gaps in protection, and to give organizations the opportunity to fix problems before a disaster occurs. Testing offers proof that the emergency response plan is effective and hits RPOs and RTOs. Since IT systems and technologies are constantly changing, disaster recovery testing also ensures a DRP is up to date.

Reasons given for not testing DRPs include budget restrictions, resource constraints and a lack of management approval. Testing takes time, resources and planning, and it can be risky if the test uses live data.

Disaster recovery testing varies in complexity. Typically, there are four ways to do it:

- Plan review. A plan review includes a detailed discussion of the DRP and looks for missing elements and inconsistencies. This effort is central to regular plan evaluations and updates.

- Tabletop exercise. In a tabletop test, participants walk through disaster scenarios and planned activities step by step to demonstrate whether disaster recovery team members know their duties in an emergency. It helps identify gaps in the DRP and understand how different stakeholders would respond to the situation. This is a good opportunity for team members to communicate and collaborate on disaster recovery processes and practices.

- Parallel testing. Parallel testing involves running both the primary system and the backup or recovery system simultaneously to compare their performance and ensure the effectiveness of the backup system. This test is a simulation without a failover, letting organizations assess whether the backup system can handle the workload and maintain data integrity while the primary system is still operational.

- Simulation testing. A simulation test uses resources such as recovery sites and backup systems in what's essentially a full-scale test. This is often an actual failover where the production infrastructure is shifted to disaster recovery resources. Different disaster scenarios are simulated within a controlled environment to verify the effectiveness of the DRP and to gauge how fast an organization can resume business operations after a disaster.
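
For parallel testing, one basic data integrity check is to compare the records held by the primary system with those held by the recovery system. The Python sketch below does this for two in-memory dictionaries keyed by record ID. The function name and the sample order data are hypothetical; in practice the records would be exported or queried from the two systems under test.

```python
def compare_systems(primary: dict, standby: dict) -> dict:
    """Compare records keyed by ID from the primary and the standby system."""
    missing = sorted(primary.keys() - standby.keys())  # in primary only
    extra = sorted(standby.keys() - primary.keys())    # in standby only
    mismatched = sorted(
        key for key in primary.keys() & standby.keys() if primary[key] != standby[key]
    )
    return {
        "missing": missing,
        "extra": extra,
        "mismatched": mismatched,
        "consistent": not (missing or extra or mismatched),
    }


# Hypothetical snapshots taken from both systems during a parallel test.
primary_records = {"order-1": "paid", "order-2": "shipped", "order-3": "pending"}
standby_records = {"order-1": "paid", "order-2": "packed"}

report = compare_systems(primary_records, standby_records)
print(report["missing"])     # ['order-3']
print(report["mismatched"])  # ['order-2']
print(report["consistent"])  # False
```

A report like this shows where replication lags or diverges, but it does not measure whether the standby can carry the production workload, which a parallel test would assess separately.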

Incident management plan vs. disaster recovery plan

An incident management plan (IMP) should be incorporated into the DRP; together, the two create a comprehensive data protection strategy. The goal of both plans is to minimize the negative effects of an unexpected incident, recover from it and return the organization to its normal production levels as quickly as possible. However, IMPs and DRPs aren't the same.

The major difference between an IMP and a DRP is their primary objectives:

- An IMP focuses on protecting sensitive data during an event and defines the scope of actions to be taken during the incident, including the specific roles and responsibilities of the incident response team.

- The goal of a DRP is to minimize the effects of an unexpected incident, recover from it and return the organization to its normal business operations as fast as possible.

- An IMP is an organized response to security incidents that involve detection, analysis, containment, eradication and recovery procedures. It identifies the most likely threats and documents steps to prevent them. A DRP focuses on defining the recovery objectives and the steps that must be taken to bring the organization back to an operational state after an incident occurs.

- An IMP focuses on how a business will detect and manage a cyberattack to reduce potential damage and consequences to the business.

- A DRP addresses the bigger questions surrounding a potential cyberattack, identifying how the business will recover and resume normal work operations after a catastrophe or security incident.

Examples of a disaster recovery plan

An organization can use a DRP response for various situations. The following are examples of specific scenarios and the corresponding actions outlined in a DRP:

Example 1. Data center failure

Scenario: A data center experiences a power outage or hardware failure.

Response:

- Activate backup generators to ensure continuous power supply.

- Initiate a failover to redundant systems or secondary data centers.

- Restore data from backups stored offsite or in the cloud.

- Communicate with stakeholders about the status of the situation and expected recovery time.

Example 2. Cyberattack

Scenario: A ransomware attack encrypts critical systems and data of an organization.

Response:

- Isolate affected systems to prevent further spread of the attack.

- Engage cybersecurity experts to identify and mitigate the source of the attack.

- Restore systems from clean backups to minimize data loss and downtime.

- Incorporate additional security measures to prevent future attacks.

Example 3. Human error or accidental data loss

Scenario: An employee inadvertently deletes important files or database records.

Response:

- Immediately stop any ongoing operations that could exacerbate the problem.

- Attempt to recover the deleted data from backups or shadow copies.

- Use data recovery tools or services to retrieve lost information if necessary.

- Review access controls and permissions to minimize the risk of similar incidents in the future.
